Estimating Software Development

This is a huge topic, so I am just going to touch on some high-level points. What prompted me to write about it was a recent conversation with a client and a cartoon I came across at Gizmodo about what happens when your Boss Estimates Software.

How long it will take to write a particular piece of software depends on several factors:

- what is your direct experience in writing that sort of software?

- is there existing code you can leverage? – Software Reuse

- buy versus build – can you buy a module?

- what is the Operating System? – an RTOS will usually slow things down

- is it a hard or easy problem?

- is it well defined?

- how will it be tested?

- what quality standards does it have to comply with? – e.g. IEC 62304

- how many people will be working on it?

I’m sure you get the idea.

And coding is only one part of the Software Development Process. That is the thing that gets forgotten more than anything else.

Software Development Process

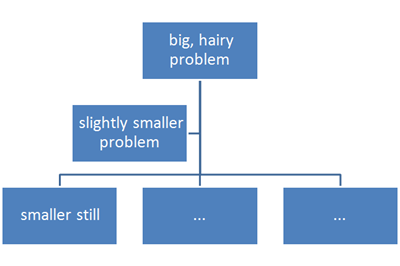

In the Software Development Process, coding is preceded by:

- user requirements analysis

- product requirements analysis

- technical analysis

- solution selection

- specification

- test methodology

- Software Design

Then there may be a Design Review.

Then we code (sometimes referred to as putting the bugs in).

Then we test and debug (getting the bugs out).

Then there may be a Code Review and Refactor, followed by confirmation that it still passes all the tests.

Then we complete the Software Documentation package and create a labelled revision so it can be properly released and tracked.

That is the small-team version of the process, and for some projects some of those steps are trivial.

Larger companies have larger processes but can also do larger projects as a result.

Industry Metrics for Coding

Here are some really basic Coding Metrics.

High-security systems, financial systems and mission-critical code: as little as 10 lines of fully debugged and documented code per day, averaged across the whole process.

Commercial and scientific software is usually created at a rate of between 100 and 1000 lines of code per person per day.

And better processes actually speed that up rather than slowing it down.

Estimating Software Development Time

A recent conversation with a client was on the topic of redoing someone else's code. They had been working with another Software Development company and had decided that the code needed to be done again. They had spent two years without getting to a fully working version. My first thought was "commendable patience". My second was merely "ouch"!

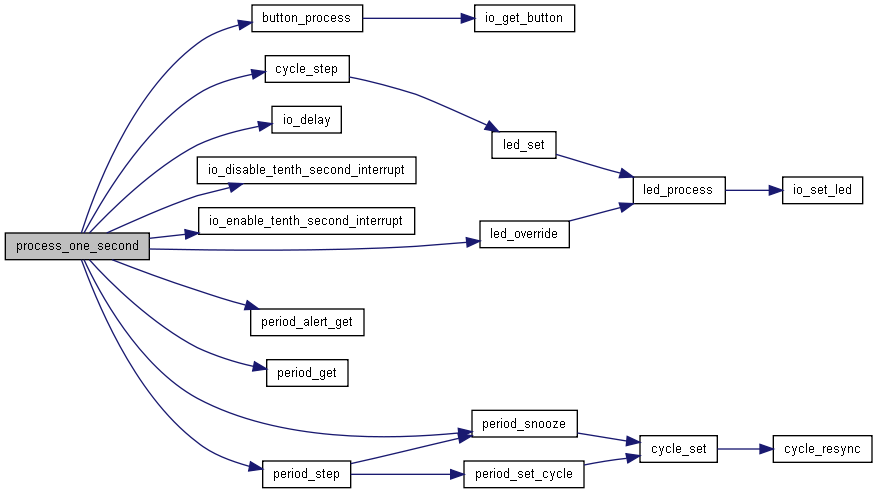

So we did some analysis. I was initially optimistic. We used a tool called RSM to analyse the code base. We apply quite a lot of science in our Software Development Process, including Static Analysis, Code Quality Analysis and complexity measurement. The initial analysis showed 50K lines of code with an average Cyclomatic Complexity of 6.21. The normal rule of thumb is that anything above 5 should be redesigned. Not good. Then we looked at some specific files with really high complexity scores, above 10. That was the clincher: no evidence of design, no consistency, lots of cut and paste, and everything was a global variable.
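To make the complexity numbers less abstract: McCabe's cyclomatic complexity is defined on a function's control-flow graph as M = E − N + 2P (edges, nodes, connected components), and for structured code it works out to the number of decision points plus one. The sketch below is not RSM and does not reproduce its counting rules; it is a minimal, Python-only illustration that uses the standard library `ast` module on Python source as a stand-in for the C code a tool like RSM would analyse. The counting rules (which node types count as a decision) are a simplified assumption, and real tools differ in the details. The threshold of 5 is the rule of thumb quoted above.

```python
import ast
from statistics import mean

REDESIGN_THRESHOLD = 5  # rule of thumb quoted in the article

# Simplified assumption: each of these node types adds one decision point.
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)
_SCOPE_NODES = (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)


def _own_nodes(func):
    """Yield nodes inside func, without descending into nested functions/classes."""
    stack = [n for n in func.body if not isinstance(n, _SCOPE_NODES)]
    while stack:
        node = stack.pop()
        yield node
        stack.extend(c for c in ast.iter_child_nodes(node) if not isinstance(c, _SCOPE_NODES))


def cyclomatic_complexity(func) -> int:
    """Approximate McCabe complexity: decision points + 1."""
    complexity = 1  # a single straight-line path
    for node in _own_nodes(func):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1  # note: an `elif` is a nested If, so it counts too
        elif isinstance(node, ast.BoolOp):
            complexity += len(node.values) - 1  # each extra `and`/`or` operand branches
        elif isinstance(node, ast.comprehension):
            complexity += 1 + len(node.ifs)  # the implicit loop plus any filters
    return complexity


def function_complexities(source: str):
    """Return (name, line, complexity) for every function in the source."""
    tree = ast.parse(source)
    return [
        (node.name, node.lineno, cyclomatic_complexity(node))
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]


SAMPLE = '''
def simple(x):
    return x + 1

def branchy(x, y):
    if x > 0 and y > 0:
        return 1
    elif x < 0:
        return -1
    for i in range(y):
        if i % 2:
            x += i
    return x
'''

if __name__ == "__main__":
    results = function_complexities(SAMPLE)
    for name, line, score in results:
        flag = "  <- redesign candidate" if score > REDESIGN_THRESHOLD else ""
        print(f"{name} (line {line}): {score}{flag}")
    print("average:", mean(score for _, _, score in results))
```

Here `branchy` scores 6 (two `if`s, one `elif`, one `and`, one `for`, plus one) and gets flagged, while `simple` scores 1. Note that an average like the 6.21 above can hide a long tail of much worse functions, which is why looking at the individual high scorers was so revealing.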

The good news is that the real complexity of the required code will not need 50K lines of code once it is properly designed. The bad news is that our client was right: it did need to be done again from scratch. Some parts might have been reusable, but it was unlikely.

Assuming we can do it with 20K lines of code, it will take between 20 and 200 person-days to produce. In our case it will be closer to 20 person-days, because the thing that makes the biggest difference to delivering software on time is your Software Development Process.
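The 20 to 200 person-day range is simply the code size divided by the coding rates from the metrics section (1000 and 100 lines per day), treating those rates as per-person, whole-process figures. A minimal sketch of that arithmetic follows; `person_days` and `estimate_range` are hypothetical helper names, and the calculation deliberately ignores team size, reuse and the other factors listed at the top of this post.

```python
def person_days(lines_of_code: int, lines_per_day: float) -> float:
    """Effort at a whole-process rate of fully debugged, documented lines per person-day."""
    if lines_per_day <= 0:
        raise ValueError("lines_per_day must be positive")
    return lines_of_code / lines_per_day


def estimate_range(lines_of_code: int, slow_rate: float, fast_rate: float):
    """Return (best case, worst case) person-days for a range of coding rates."""
    return person_days(lines_of_code, fast_rate), person_days(lines_of_code, slow_rate)


if __name__ == "__main__":
    loc = 20_000  # size assumed for the redesigned code base
    best, worst = estimate_range(loc, slow_rate=100, fast_rate=1000)
    print(f"commercial/scientific: {best:.0f} to {worst:.0f} person-days")
    # For contrast, the same code at the high-security rate of 10 lines/day:
    print(f"high-security: {person_days(loc, 10):.0f} person-days")
```

The same 20K lines written to high-security standards would take around 2000 person-days, which shows how strongly the required quality level drives the estimate.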

So that is a really quick look at a really big topic.

Successful Endeavours specialise in Electronics Design and Embedded Software Development. Ray Keefe has developed market leading electronics products in Australia for nearly 30 years. This post is Copyright © 2014 Successful Endeavours Pty Ltd.