Big Data

You have probably heard the term Big Data by now. I certainly mentioned it in passing in my post on Information Overload. So I was amused to receive the final edition of IEEE Computing in Science & Engineering for 2011 with Big Data as the topic.

I learned a few more things about Big Data that I hadn’t considered up until now. These are:

- storing the data is a major issue

- moving the data between storage and processing is an even bigger issue

- processing capacity is increasing faster than storage or transport capacity

- for simulations, the results matrices are so huge that reducing them before storage is the only way they can be handled

An example where all these points converge is climate modelling, where the exponential growth in sensors and the complexity of the models mean there is too much data, too widely dispersed, to get it to one place, process it and get the results back out efficiently. A new methodology is required for problems like this.
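To make the idea of reducing simulation results before storage concrete, here is a minimal sketch in Python. It is not taken from the IEEE articles. It assumes the simulation can hand over its results one row at a time, and `simulate_rows` is a hypothetical stand-in for that simulation. The reduction uses Welford's running mean and variance method, so the full results matrix never has to be held in memory or written to disk.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class RunningSummary:
    """Running count, mean, variance, min and max (Welford's method)."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean
    minimum: float = math.inf
    maximum: float = -math.inf

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def variance(self) -> float:
        # Population variance of everything seen so far.
        return self.m2 / self.count if self.count else 0.0


def simulate_rows(n_rows, n_cols, seed=0):
    """Hypothetical simulation: yields one row of results at a time."""
    rng = random.Random(seed)
    for _ in range(n_rows):
        yield [rng.gauss(0.0, 1.0) for _ in range(n_cols)]


def reduce_results(rows):
    """Fold every value into one small summary instead of storing the rows."""
    summary = RunningSummary()
    for row in rows:
        for value in row:
            summary.update(value)
    return summary


summary = reduce_results(simulate_rows(1000, 50))
print(summary.count, summary.mean, summary.variance)
```

Only five numbers are kept for the 50,000 simulated values. The trade-off is that anything not captured in the summary is gone, so the choice of what to keep has to be made before the run.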

IO Bottleneck

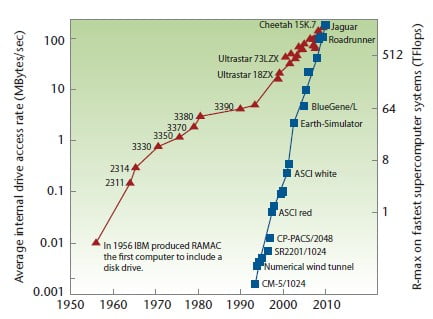

So we are back to the old IO Bottleneck problem. The graph that really got my attention tracked the growth in data access rates against the growth in data processing rates.

The rate of performance improvement in disks (red line) is much lower than that in computing systems (blue line), driving the need for larger disk counts in each generation of Supercomputer. This approach isn’t sustainable regardless of whether you look at cost, power or reliability. Richard Freitas of IBM Almaden Research provided some of this data for IEEE.

So we have reached the point where the storage and movement of data are now the limiting factors in computing analysis. 40 years ago Seymour Cray had to overcome this at the individual computing system level to build the Supercomputers he is famous for. Today we have hit it at the system level.

Areas being looked at for innovative solutions are:

- higher density and faster storage systems

- data compression or subsetting algorithms to reduce the amount of data to be moved or stored

- parallel processing techniques with parallel storage to reduce the bottleneck

- results summarisation so less storage is required for the analysis results

And all this while trying to maintain data integrity and traceability for proof of scientific rigour. Answers will be found; that much we can be sure of from history.
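Two of the ideas listed above, subsetting and compression, can be combined with a basic integrity check in a few lines. The following is a rough Python sketch under my own assumptions, not a method from the IEEE issue. The sensor trace is made up, keeping every 10th sample is an arbitrary choice, and the helper names are hypothetical. It uses only the standard `zlib`, `hashlib` and `struct` modules.

```python
import hashlib
import struct
import zlib


def pack_readings(readings):
    """Pack floats as little-endian 32-bit values (4 bytes each)."""
    return struct.pack(f"<{len(readings)}f", *readings)


def compress_with_digest(payload: bytes):
    """Return the compressed payload plus a SHA-256 digest of the original."""
    digest = hashlib.sha256(payload).hexdigest()
    return zlib.compress(payload, 9), digest


def restore_and_verify(compressed: bytes, expected_digest: str) -> bytes:
    """Decompress and confirm the bytes match the recorded digest."""
    payload = zlib.decompress(compressed)
    if hashlib.sha256(payload).hexdigest() != expected_digest:
        raise ValueError("payload does not match the recorded digest")
    return payload


# Made-up, slowly varying sensor trace (an assumption for illustration).
readings = [20.0 + (i // 100) * 0.5 for i in range(10_000)]

subset = readings[::10]  # subsetting: keep every 10th sample (lossy)
raw = pack_readings(subset)
compressed, digest = compress_with_digest(raw)  # compression: lossless

restored = restore_and_verify(compressed, digest)
print(len(pack_readings(readings)), len(raw), len(compressed))
```

The subsetting step throws data away for good, while the compression step is fully reversible. Storing the digest alongside the compressed data is one simple way to show later that what was analysed is what was recorded.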

And there is a lot of money to be made from doing this well. Forbes put the value of the Big Data market at $50 billion.

Successful Endeavours specialise in Electronics Design and Embedded Software Development. Ray Keefe has developed market leading electronics products in Australia for nearly 30 years. This post is Copyright © 2012 Successful Endeavours Pty Ltd